Instagram says it’s combating racism and abuse on its platform with the global rollout of new features and tweaks.

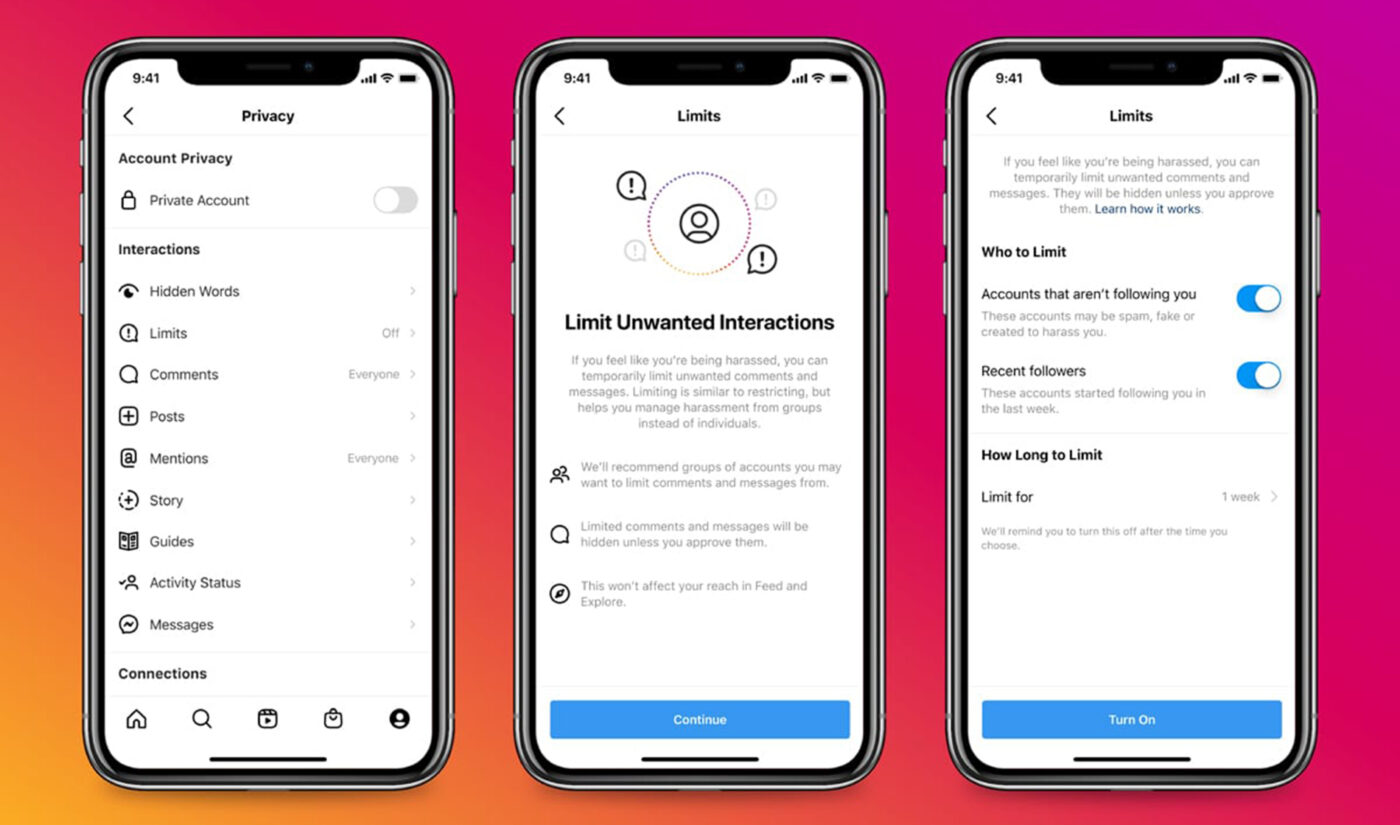

First up is Limits, which, as its name suggests, allows users to automatically hide comments and direct-message requests from people who don’t follow them, or who only recently followed them.

The feature is intended to be toggled on when users “experience or anticipate a rush of abusive comments and DMs,” Instagram head Adam Mosseri said in an official blog post about the new tools.

Mosseri added that Instagram developed this feature because it’s aware that creators and public figures tend to deal with “sudden spikes” of comments and DM requests from people “who simply pile on in the moment.”

“We saw this after the recent Euro 2020 final, which resulted in a significant–and unacceptable–spike in racist abuse towards players,” Mosseri explained. (For those not aware, that soccer final took place July 11, and saw England lose to Italy. Immediately afterward, the English team’s Black players received torrents of abuse across social media platforms. In the past month, at least five people in the U.K. have been arrested for racist, threatening posts they allegedly made online.)

Giving tools to ppl isn’t enough. We must approach the issue from both sides: continuing to improve our technology to proactively protect people from racism; and empowering ppl with tools like Limits & Hidden Words. We won’t rely on one or the other; working on both is critical.

— Adam Mosseri 😷 (@mosseri) August 11, 2021

Mosseri said Instagram is letting users toggle Limits on and off because feedback from creators indicated they don’t want to permanently disable comments and DMs from non-followers; they only want to be able to hide them in crucial moments.

Limits is now live for every Instagram user. Mosseri said that Instagram is currently developing ways to automatically detect when accounts may experience spikes and then prompt users to turn Limits on.

More warnings for commenters, more filters for creators

The other two updates Instagram is rolling out today are also aimed at combating harassment in comments and DMs.

On the user side, the platform is amping up its offensive comment warning system. This system, introduced in 2019, prescans not-yet-posted comments and serves their writers with popups when offensive and/or potentially guideline-violating content is detected within them.

Mosseri said that up till now, Instagram has served users a mild warning the first time they try to post a potentially abusive comment; it only served “stronger” warnings when they tried to post abusive comments multiple times.

“Now, rather than waiting for the second or third comment, we’ll show this stronger message the first time,” he said.

Thus far, these warnings have been effective, Mosseri said. In the last week, Instagram showed warnings around one million times per day, and around 50% of the time, the comment was either edited or deleted by the user post-warning, he said.

If people don’t feel safe, they’re not going to use IG, which means we have to keep working to prevent abuse. There are over 1B people on IG, which inevitably includes some racists. So we’re going to keep making changes and listening to feedback about what more we can do.

— Adam Mosseri 😷 (@mosseri) August 11, 2021

As for the creator side, Instagram is globally expanding Hidden Words, an autofilter that shunts DM requests with potentially offensive words, hashtags, and emojis into a separate folder, so creators don’t have to look at them if they don’t want to.

Hidden Words also catches potentially spammy and “low-quality” DMs, Mosseri said.

This feature will roll out to all users by the end of August.