A Wall Street Journal investigation found that TikTok’s recommendation algorithm can suss out new users’ interests in under two hours, and then drive them into ever-narrowing “rabbit holes” of content.

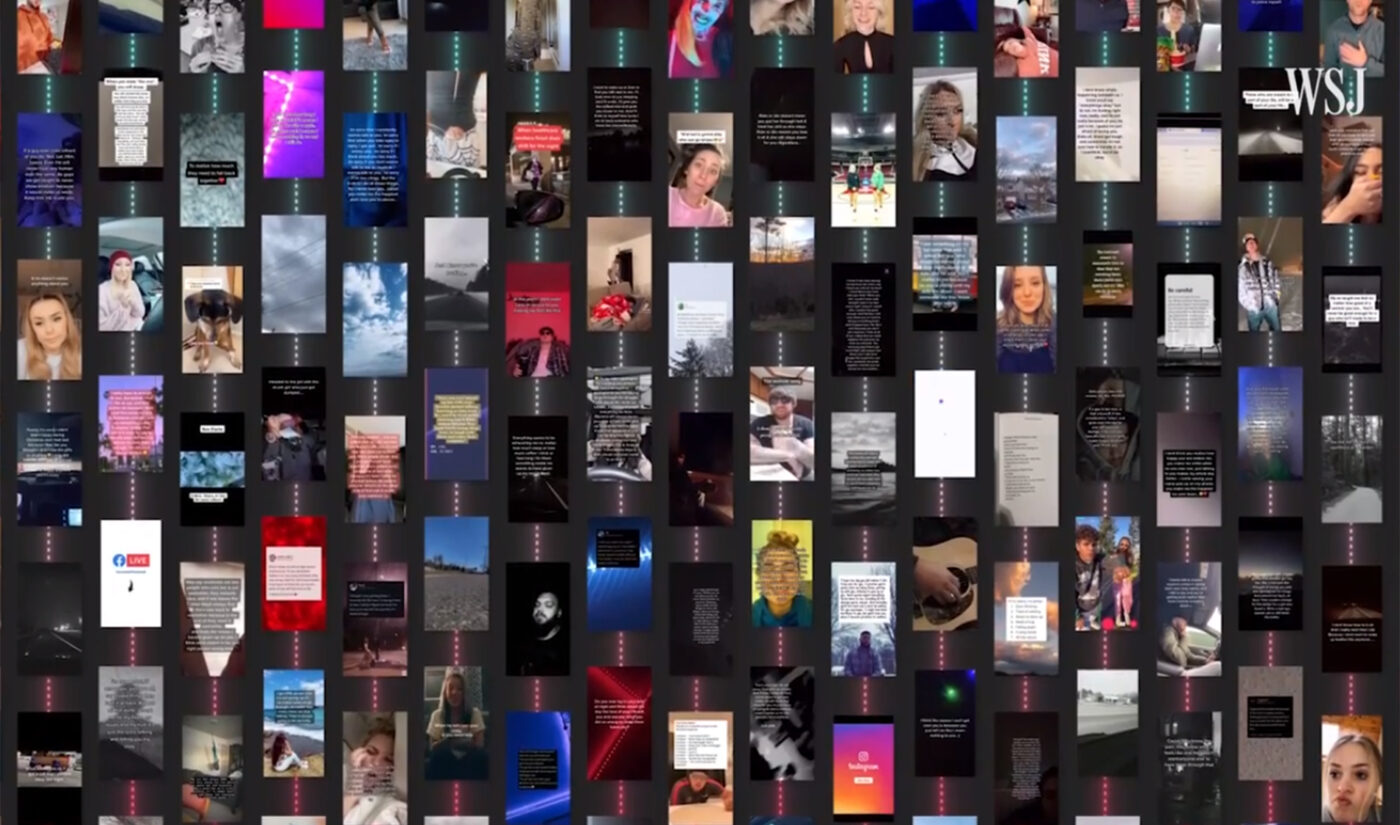

The investigation used 100 fresh TikTok accounts operated by bots, each programmed with specific interests such as extreme sports, dance, astrology, or pets. The bots lingered over or rewatched videos that matched their interests and swiped past content that didn't (for example, a bot interested in dogs would not watch a video about pouring concrete).

Across the board, the bots (which, to be clear, gave TikTok no information other than a location and a birthdate) were first served a variety of popular videos.


These early videos had an average of 6.31 million views each. As the bots signaled their interests, TikTok served them more and more content resembling the videos they appeared to like. Later videos, the WSJ found, averaged 0.78 million views and were more likely to contain troubling content, including content that outright violated TikTok's terms of service (such as disordered eating, self-harm, and sexualization of minors).

What do we mean by “troubling content”?

Well, one bot in particular, called kentucky_96, was programmed to express an interest in content about sadness and depression. Three minutes and 15 videos into its TikTok journey, it watched a 35-second video about sadness twice. Thirty-three minutes later, it had been served a total of 224 videos, and its feed had turned into a “deluge of depressive content,” the WSJ says.

By that point, 93% of videos served to kentucky_96 were about depression and mental health struggles.

A TikTok spokesperson told the WSJ that the remaining 7% of videos were intended to help the user discover different content. They also said the study was not representative of real user behavior, because humans have diverse sets of interests.

The WSJ argues that even bots programmed with broader sets of interests were driven into rabbit holes containing concerning content. One bot with a general interest in politics, for example, ended up being served election-fraud and QAnon conspiracy videos, according to the WSJ.

90-95% of TikTok’s views likely come from recommended content, Guillaume Chaslot estimates

Guillaume Chaslot, an engineer who helped build YouTube’s recommendation algorithm but now campaigns against its influence, told the WSJ that TikTok’s systems are “even worse” than YouTube’s.

“On YouTube, more than 70% of the views come from the recommendation engine, so it’s already huge,” he said. “But on TikTok, it’s even worse: it’s probably like 90-95% of the content that is seen that comes from the recommendation engine.”

This can be a problem, he said, because these algorithms are built with one main purpose: to drive engagement.

In the case of kentucky_96, “Basically the algorithm is detecting that this depressing content is useful to create engagement, and pushes depressing content,” Chaslot said. “The algorithm is pushing people towards more and more extreme content, so it can push them toward more and more watch time.”

Chaslot said that recommendation algorithms like YouTube’s and TikTok’s search for the content that viewers are “vulnerable” to. “It doesn’t mean you really like it, or that’s the content you enjoy the most,” he said. “It’s just the content that’s most likely to make you stay on the platform.”

The WSJ’s full study is available to watch on the WSJ’s website.