Back in September, leaked content moderation guidelines from TikTok showed the short-form video app and its China-based parent company, Bytedance, instructing moderators to remove videos about topics the Chinese government doesn’t approve of, such as Tiananmen Square and the fight for Tibetan independence.

At the time, TikTok and Bytedance said the guidelines dated from the app’s “early days,” and that back then, “we took a blunt approach to minimizing conflict on the platform, and our moderation guidelines allowed penalties to be given for things like content that promoted conflict, such as between religious sects or ethnic groups.” The company added that at some unspecified point, it recognized “this was not the correct approach,” and subsequently changed its rules.

Now, the platform has issued a very similar statement in response to more leaked moderation documents that show it suppressed content made by people it deemed at high risk of bullying, including disabled, fat, and LGBTQ+ people.

German digital rights blog Netzpolitik, which uncovered the documents and spoke to a person familiar with the matter to confirm they were actively used by moderators, reports that TikTok flagged these people as “special users” and capped the reach of all their videos, regardless of what their videos were about.

TikTok apparently suppressed users’ accounts in two main ways, restricting their videos either geographically or by number of views.

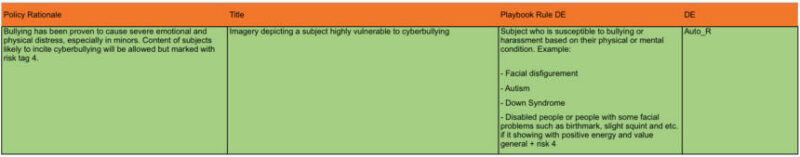

In a section of its moderation guidelines called ‘Imagery Depicting A Subject Highly Vulnerable To Cyberbullying,’ the platform instructs moderators to mark people “susceptible to harassment or cyberbullying based on their physical or mental condition” as ‘Risk 4,’ a level that restricts their content to only being viewed by users within their own countries. That’s the geographic level.

There’s also an even stricter suppression level, ‘Auto R,’ which moderators are told to apply to users with facial disfigurement, autism, Down syndrome, and “disabled people or people with some facial problems such as birthmark, slight squint and etc.” Auto R stops a user’s videos from appearing on other users’ For You pages, which recommend rising videos viewers might be interested in, once those videos reach a certain number of views. (Netzpolitik mentions caps of 6,000 and 10,000 views, but it’s not clear whether those are the actual caps or just example view counts.)

Interestingly, the Auto R instructions note that if a disabled person or person with a disfigurement is “showing with positive energy and value,” they should be labeled Risk 4 rather than Auto R.

A screencap of the Auto R guidelines obtained by Netzpolitik

The bottom line is that despite apparently being formulated with protective intentions, these guidelines punish marginalized people rather than the people who might target them.

A TikTok spokesperson told Netzpolitik that these guidelines were used “at the beginning” of TikTok to counteract bullying.

“This approach was never intended to be a long-term solution, and although we had a good intention, we realized that it was not the right approach,” they said, adding that the rules Netzpolitik obtained have since been changed. However, Netzpolitik reports that the documents show these guidelines were in place as recently as September 2019. That is, again, when TikTok came under fire for its other moderation policies, and again claimed they were old policies that had been changed.

TikTok would not share information about the moderation policy that replaced this one.