Facebook is expanding its ban on content promoting white supremacy to include content that supports white nationalism and white separatism.

The decision comes in the wake of the Christchurch massacre, in which a white supremacist killed 50 mosquegoers. Following the shooting, social media users called for Facebook and fellow platforms like YouTube and Twitter to monitor hate content more stringently at all times, not just in response to tragedies.

“Our policies have long prohibited hateful treatment of people […] and that has always included white supremacy,” Facebook said in a company blog post. “We didn’t originally apply the same rationale to expressions of white nationalism and white separatism because we were thinking about broader concepts of nationalism and separatism.”

Those “broader concepts” include things like American pride and Basque nationalism (a movement to establish an independent nation for the Basques, a people indigenous to the Pyrenees mountains between France and Spain).

But Facebook has realized “that white nationalism and white separatism cannot be meaningfully separated from white supremacy and organized hate groups,” it said. For those who don’t know: white supremacists believe white people are superior to people of color; white nationalists believe white people should have their own national identity, that people of color should be a minority population, and that multiculturalism is a threat to white people’s continued existence; and white separatists believe in establishing an all-white ethnostate by removing people of color or moving away from them.

Facebook added that it conducted a review of hate figures and organizations and found a significant “overlap” between content supporting white nationalism, white separatism, and white supremacy.

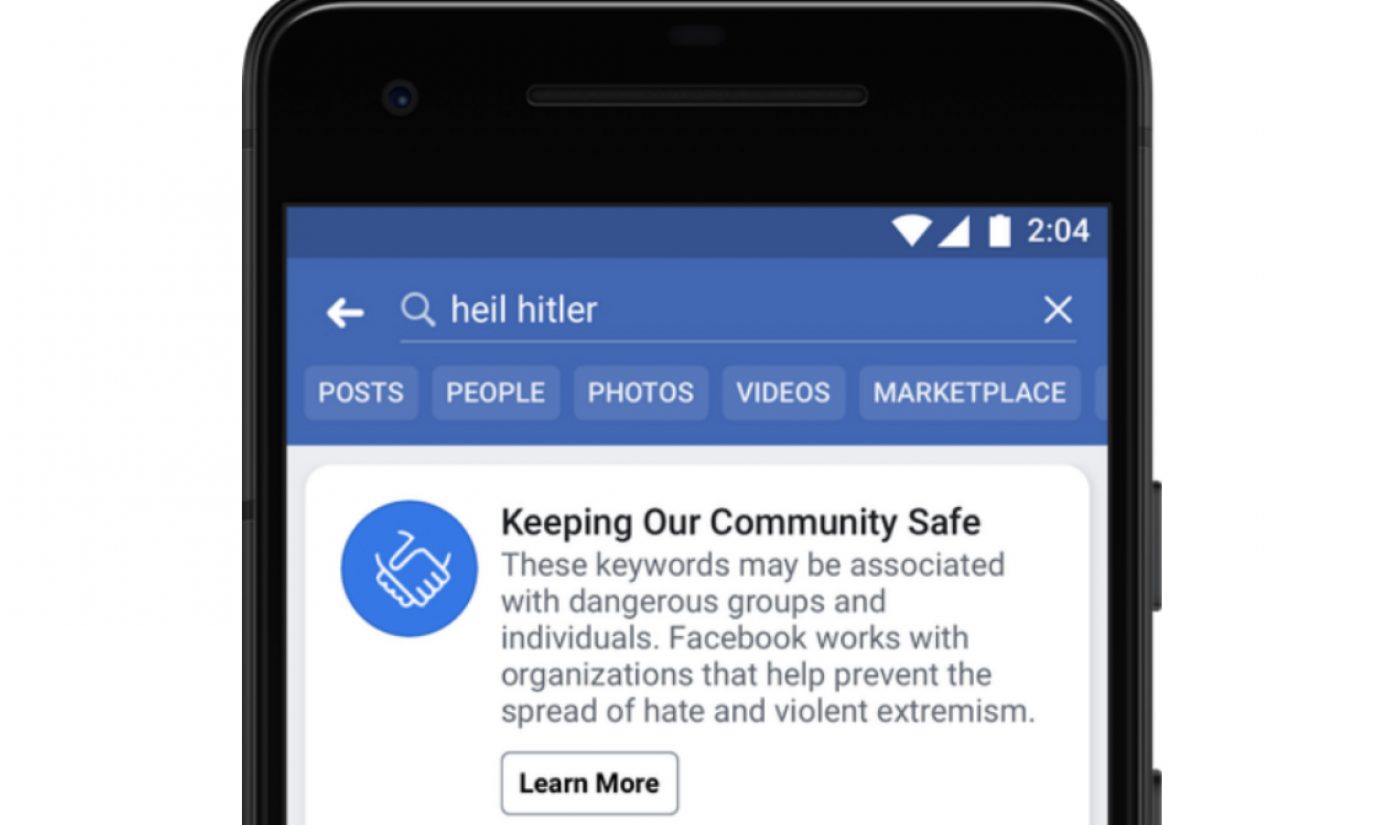

So, going forward, Facebook (and subsidiary Instagram) will remove content that praises, supports, or represents any of the three. It will also direct people who search for terms associated with white supremacy to the organization Life After Hate, which was founded by former members of extremist hate groups and now offers crisis intervention, education, and support groups for those who want to leave hate groups and unlearn toxic beliefs.

Facebook also said it’s aware that it “need[s] to get faster and better at finding and removing hate from our platforms.” Following the Christchurch massacre, during which the shooter livestreamed 17 minutes of footage on Facebook, the platform was criticized for taking half an hour to remove the video, and for not removing subsequent reuploads quickly enough. (It’s also facing a lawsuit over the speed of its response, filed this week by a Muslim advocacy group.)

Right now, Facebook uses machine learning and artificial intelligence (AI) to find hate content — something it began doing in fall 2018. It didn’t offer any concrete steps it’s taking to speed up or improve the response of its AI, but acknowledged “we have a lot more work to do.”

“Unfortunately, there will always be people who try to game our systems to spread hate,” the platform concluded. “Our challenge is to stay ahead by continuing to improve our technologies, evolve our policies, and work with experts who can bolster our efforts.”