Despite YouTube’s efforts over the past three months, the platform’s “wormhole” of videos and comments fetishizing young children is still thriving.

In mid-February, YouTuber Matt Watson posted a lengthy video detailing what he called a “wormhole” of predatory comments being left on YouTube videos of young children. Many of those videos appeared to have been stolen from genuine accounts where parents and children shared videos, then reuploaded by accounts with random English or Cyrillic usernames, a number of which had uploaded multiple videos of different children.

Following Watson’s video, and amid the exit of advertisers en masse, YouTube took a number of steps to strengthen child safety on the platform. It introduced a new algorithm it claimed was twice as effective at hunting down and removing predatory comments. It disabled comments wholesale on tens of millions of videos showing minors. And, controversially, it stripped the ability to receive comments from thousands of seemingly otherwise normal, healthy channels simply because they regularly upload videos showing kids.

But a New York Times report published today, about a Brazilian woman whose daughter’s innocent home video recently ended up in the wormhole being watched by hundreds of thousands of people, seems to indicate the problem is still ongoing.

When Watson described a “wormhole” back in February, what he meant (and showed in his video) was this: If a viewer clicked on a video of a prepubescent child in a bathing suit or gymnastics leotard, YouTube’s ‘Up Next’ bar filled with more videos of a similar nature. Once a viewer watched just one of those videos, YouTube would recommend dozens more, and those recommendations would spread from the Up Next bar to the user’s homepage.

The woman the Times interviewed, Christiane C., said her 10-year-old daughter uploaded a video of herself and her friend playing in their backyard pool. She got an inkling something was off when the video amassed 400,000 views in just a few days. The view count was coming from the wormhole. Months after YouTube had begun tackling the issues surrounding videos of kids, Christiane’s daughter’s video had been recommended alongside other videos showing underage kids wearing bathing suits and gymnastics leotards.

In addition to Christiane, the Times spoke with experts from Harvard’s Berkman Klein Center for Internet and Society. Harvard’s researchers, who recently set out to study how YouTube’s algorithm recommended videos to people in Brazil, told the Times they stumbled upon the wormhole, and it became a focus of their study. While YouTube obviously didn’t set out to curate content for pedophiles, what its recommendation algorithm is doing is tantamount to automatically putting together a “catalog” of videos that sexually exploit children, they said.

The researchers also confirmed what Watson found back in February: viewers don’t need to actively search for videos of young children to end up in the wormhole. Watson got into the wormhole after clicking on a bikini haul video from an adult creator. A video of a bathing suit-clad young girl was in the Up Next bar.

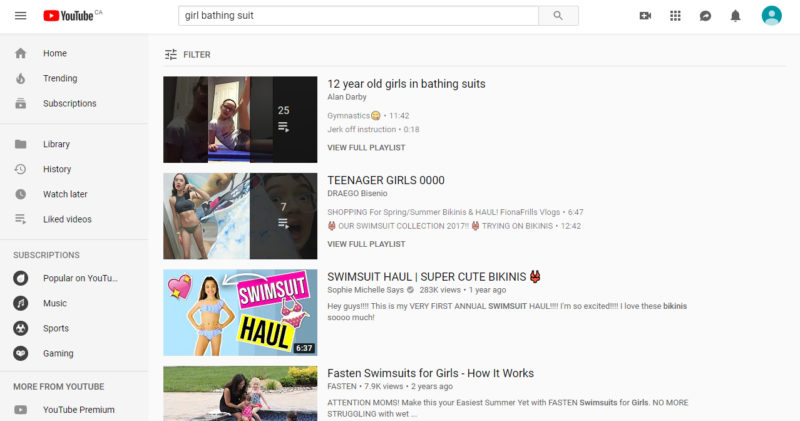

We conducted our own verification tests when we reported Watson’s findings. Today, to see how extensive the presence of fetishizing videos and comments still is, we created a fresh Google account and opened YouTube in an incognito browser window. Then we searched “girl bathing suit.”

The first result that popped up was a playlist called 12 year old girls in bathing suits. The second result was a playlist called Teenager Girls 0000.

We focused on the first playlist. It has 25 videos — most of which appear to have been stolen — that show prepubescent girls in bikinis and doing gymnastics. One video in the playlist is called Jerk Off Instruction. It features a short clip of an older man apparently jokingly telling viewers they should be ashamed of themselves. In reply to a comment on that video, the uploader says he doesn’t know why his video would be included in such a playlist, and urges the commenter to report the playlist.

When we clicked on videos within the playlist, most of them had comments removed. But many videos in Up Next recommendations branching off from those videos did not. As we found in February, most of the videos come from outside the U.S., with titles and comments in Portuguese and Russian. Many of the comments, when translated, are similar to those we found in February, with users telling the young girls in the videos (who, again, oftentimes are not the actual uploaders) that they’re desirable.

We found one channel, Teen Model, which has been uploading videos containing photos of partially clothed underage girls for more than a month. Its videos have collectively received more than 691,000 views. Comments are enabled on the channel, and every one of the videos we clicked on had at least one inappropriate comment. Some users left subtler remarks calling the girls “nice” and “beautiful princesses.” Others were not as subtle, with one blatantly stating, “You can talk about pedophilia…but there’s no way you can resist a new one.”

Tubefilter reached out to YouTube about the videos and comments we saw. YouTube linked us to its recently-published blog post on child and family safety (below) and said it had no additional comments.

Commenters on the Teen Model channel also engaged in “timestamping,” something Watson called attention to. Timestamping involves leaving a comment that guides readers to a particular spot in the video. On videos like these, those timestamps are meant to recommend moments the commenter found attractive.

Before the Times published its piece, it notified YouTube that the issues from February were ongoing. After being notified, YouTube removed some of the videos the Times was keeping an eye on, but left “many others” uploaded, the Times reports.

“Protecting kids is at the top of our list,” Jennifer O’Connor, YouTube’s product director for trust and safety, told the Times. She said that since February, YouTube has constantly been working to increase child safety.

But O’Connor denied that YouTube’s recommendation algorithm is keeping the wormhole going. “It’s not clear to us that necessarily our recommendation engine takes you in one direction or another,” she said. However, she added that, “when it comes to kids, we just want to take a much more conservative stance for what we recommend.”

YouTube told the Times that as a result of its inquiries, the platform plans to “limit” recommendations on videos of young children. It will not entirely turn off the recommendation algorithm on such videos because recommendations drive 70% of total views, it said.

We asked YouTube what exactly limiting recommendations entails. YouTube declined to comment further.

We have also reported all of the videos and comments we found while working on this story.

Update, June 3, 3:30 p.m.

YouTube has published a blog post updating readers on its “efforts to protect minors and families.” In the post, it says that during the first quarter of 2019, it removed more than 800,000 videos for violations of its child safety policies. Most of those videos were removed before they had 10 views, it adds.

Also in the post, which does not directly reference The New York Times but does call out “recent news reports,” YouTube says, “The vast majority of videos featuring minors on YouTube, including those referenced in recent news reports, do not violate our policies and are innocently posted — a family creator providing educational tips, or a parent sharing a proud moment. But when it comes to kids, we take an extra cautious approach towards our enforcement and we’re always making improvements to our protections.”